Self-organization is a process of attraction and repulsion in which the internal organization of a system, normally an open system, increases in complexity without being guided or managed by an outside source. Self-organizing systems typically (but not always) display emergent properties.

Overview

The most robust and unambiguous examples of self-organizing systems are from physics. Self-organization is also relevant in chemistry, where it has often been taken as being synonymous with self-assembly. The concept of self-organization is central to the description of biological systems, from the subcellular to the ecosystem level. There are also cited examples of "self-organizing" behaviour found in the literature of many other disciplines, both in the natural sciences and the social sciences such as economics or anthropology. Self-organization has also been observed in mathematical systems such as cellular automata.

Sometimes the notion of self-organization is conflated with that of the related concept of emergence. Properly defined, however, there may be instances of self-organization without emergence and emergence without self-organization, and it is clear from the literature that the phenomena are not the same. The link between emergence and self-organization remains an active research question.

Self-organization usually relies on four basic ingredients:

- Positive feedback

- Negative feedback

- Balance of exploitation and exploration

- Multiple interactions

History of the idea

The idea that the dynamics of a system can tend by themselves to increase the inherent order of a system has a long history. One of the earliest statements of this idea was by the philosopher Descartes, in the fifth part of his Discourse on Method, where he presents it hypothetically. Descartes further elaborated on the idea at great length in his unpublished work The World.

The ancient atomists (among others) believed that a designing intelligence was unnecessary, arguing that given enough time and space and matter, organization was ultimately inevitable, although there would be no preferred tendency for this to happen. What Descartes introduced was the idea that the ordinary laws of nature tend to produce organization (For related history, see Aram Vartanian, Diderot and Descartes).

Beginning with the 18th-century naturalists, a movement arose that sought to understand the "universal laws of form" in order to explain the observed forms of living organisms. Because of their association with Lamarckism, these ideas fell into disrepute until the early 20th century, when pioneers such as D'Arcy Wentworth Thompson revived them. The modern understanding is that there are indeed universal laws (arising from fundamental physics and chemistry) that govern growth and form in biological systems.

Originally, the term "self-organizing" was used by Immanuel Kant in his Critique of Judgment, where he argued that teleology is a meaningful concept only if there exists such an entity whose parts or "organs" are simultaneously ends and means. Such a system of organs must be able to behave as if it has a mind of its own, that is, it is capable of governing itself.

“In such a natural product as this every part is thought as owing its presence to the agency of all the remaining parts, and also as existing for the sake of the others and of the whole, that is as an instrument, or organ... The part must be an organ producing the other parts—each, consequently, reciprocally producing the others... Only under these conditions and upon these terms can such a product be an organized and self-organized being, and, as such, be called a physical end.”

The term "self-organizing" was introduced to contemporary science in 1947 by the psychiatrist and engineer W. Ross Ashby. It was taken up by the cyberneticians Heinz von Foerster, Gordon Pask, Stafford Beer and Norbert Wiener himself in the second edition of his "Cybernetics: or Control and Communication in the Animal and the Machine" (MIT Press 1961).

Self-organization as a word and concept was used by those associated with general systems theory in the 1960s, but did not become commonplace in the scientific literature until physicists and researchers in the field of complex systems adopted it in the 1970s and 1980s. After Ilya Prigogine was awarded the 1977 Nobel Prize in Chemistry, the thermodynamic concept of self-organization attracted some public attention, and researchers began to migrate from the cybernetic view to the thermodynamic view.

Examples

The following list summarizes and classifies the instances of self-organization found in different disciplines. As the list grows, it becomes increasingly difficult to determine whether these phenomena are all fundamentally the same process, or the same label applied to several different processes. Self-organization, despite its intuitive simplicity as a concept, has proven notoriously difficult to define and pin down formally or mathematically, and it is entirely possible that any precise definition might not include all the phenomena to which the label has been applied.

It should also be noted that the farther a phenomenon is removed from physics, the more controversial the idea of self-organization as understood by physicists becomes. Also, even when self-organization is clearly present, attempts at explaining it through physics or statistics are usually criticized as reductionist.

Similarly, when ideas about self-organization originate in, say, biology or social science, the farther one tries to take the concept into chemistry, physics or mathematics, the more resistance is encountered, usually on the grounds that it implies direction in fundamental physical processes. However, it should be noted that hot bodies tend to get cold (see Thermodynamics), and that, by Le Chatelier's principle (the statistical mechanics extension of Newton's third law), systems tend to oppose this tendency.

Self-organization in physics

There are several broad classes of physical processes that can be described as self-organization. Such examples from physics include:

- structural (order-disorder, first-order) phase transitions, and spontaneous symmetry breaking such as:

  - spontaneous magnetization and crystallization (see crystal growth, and liquid crystal) in the classical domain, and

  - the laser, superconductivity and Bose-Einstein condensation in the quantum domain (but with macroscopic manifestations)

- second-order phase transitions, associated with "critical points" at which the system exhibits scale-invariant structures. Examples of these include:

  - critical opalescence of fluids at the critical point

  - percolation in random media

- structure formation in thermodynamic systems away from equilibrium. The theory of dissipative structures of Prigogine and Hermann Haken's Synergetics were developed to unify the understanding of these phenomena, which include lasers, turbulence and convective instabilities (e.g., Bénard cells) in fluid dynamics,

- structure formation in astrophysics and cosmology (including star formation, galaxy formation)

- reaction-diffusion systems, such as the Belousov-Zhabotinsky reaction

- self-organizing dynamical systems: complex systems made up of small, simple units connected to each other usually exhibit self-organization

- Self-organized criticality (SOC)

  - Self-organized criticality has also been explored in spin foam models and loop quantum gravity, an approach proposed by Lee Smolin. The main idea is that the evolution of space in time should, in general, be robust: any fine-tuning of cosmological parameters weakens the independence of the fundamental theory, and, philosophically, one can assume that in the earliest times there was no agent to tune those parameters. In a series of works, Smolin and his colleagues showed that, based on the loop quantization of spacetime, a simple evolutionary model of the very early universe (similar to the sandpile model) exhibits power-law distributions of both the size and the area of avalanches. Although this model, which is restricted to frozen spin networks, exhibits a non-stationary expansion of the universe, it is the first serious attempt toward the ambitious goal of determining cosmic expansion and inflation from a self-organized criticality theory in which the parameters are not tuned but are instead determined from within the complex system.
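The sandpile model referred to above is usually identified with the Bak–Tang–Wiesenfeld (BTW) model, the standard toy model of self-organized criticality. The following is a minimal Python sketch of the BTW model, not of Smolin's spin-network model; the grid size, number of grains, open boundary and the function names `relax` and `simulate` are illustrative assumptions. Grains are dropped one at a time at random sites, and any site holding four or more grains topples, passing one grain to each of its four neighbours; the number of topplings triggered by one dropped grain is taken as the avalanche size.

```python
import random
from collections import Counter

THRESHOLD = 4  # a site topples once it holds 4 grains, one for each neighbour


def relax(grid, start):
    """Topple unstable sites until the grid is stable; return the avalanche size.

    Avalanche size is counted as the number of topplings. Grains pushed past
    the edge of the grid are lost (open boundary), which lets the pile settle.
    """
    n = len(grid)
    size = 0
    stack = [start]
    while stack:
        i, j = stack.pop()
        if grid[i][j] < THRESHOLD:
            continue  # this site was already relaxed via another stack entry
        grid[i][j] -= THRESHOLD
        size += 1
        for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            if 0 <= ni < n and 0 <= nj < n:
                grid[ni][nj] += 1
                if grid[ni][nj] >= THRESHOLD:
                    stack.append((ni, nj))
        if grid[i][j] >= THRESHOLD:
            stack.append((i, j))  # still unstable: topple again later
    return size


def simulate(n=20, grains=10000, seed=0):
    """Drop grains one at a time at random sites; record each avalanche size."""
    rng = random.Random(seed)
    grid = [[0] * n for _ in range(n)]
    sizes = []
    for _ in range(grains):
        i, j = rng.randrange(n), rng.randrange(n)
        grid[i][j] += 1
        sizes.append(relax(grid, (i, j)))
    return grid, sizes


if __name__ == "__main__":
    grid, sizes = simulate()
    # Logarithmic bins: bin k holds avalanche sizes in [2**(k-1), 2**k).
    bins = Counter(s.bit_length() for s in sizes if s > 0)
    for k in sorted(bins):
        print(f"sizes {2 ** (k - 1)}-{2 ** k - 1}: {bins[k]} avalanches")
```

No parameter is tuned to produce the critical state: once enough grains have accumulated, the log-binned counts should fall off roughly as a power of the avalanche size, although a grid this small shows only a short power-law range before finite-size effects cut it off.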

Self-organization vs. entropy

Statistical mechanics informs us that large-scale phenomena can be viewed as a large system of small interacting particles, whose behaviour is assumed to be consistent with well-established laws such as those of equilibrium thermodynamics, including the law of entropy. However, “… following the macroscopic point of view the same physical media can be thought of as continua whose properties of evolution are given by phenomenological laws between directly measurable quantities on our scale, such as, for example, the pressure, the temperature, or the concentrations of the different components of the media. The macroscopic perspective is of interest because of its greater simplicity of formalism and because it is often the only view practicable.” Against this background, Glansdorff and Ilya Prigogine introduced a deeper view at the microscopic level, where “… the principles of thermodynamics explicitly make apparent the concept of irreversibility and along with it the concept of dissipation and temporal orientation which were ignored by classical (or quantum) dynamics, where the time appears as a simple parameter and the trajectories are entirely reversible.”

As a result, processes in thermodynamically open systems, such as biological processes that constantly receive, transform and dissipate chemical energy (and even the Earth itself, which constantly receives and dissipates solar energy), can and do exhibit properties of self-organization far from thermodynamic equilibrium.

A laser (an acronym for “light amplification by stimulated emission of radiation”) can also be characterized as a self-organized system, to the extent that matter normally in thermal equilibrium, where absorption of electromagnetic energy predominates, is driven out of equilibrium so that the reverse of the absorption process (stimulated emission) predominates instead. “If the matter can be forced out of thermal equilibrium to a sufficient degree, so that the upper state has a higher population than the lower state (population inversion), then more stimulated emission than absorption occurs, leading to coherent growth (amplification or gain) of the electromagnetic wave at the transition frequency.”
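The quoted condition can be made concrete with the Boltzmann distribution. In thermal equilibrium at temperature T, the populations of an upper and a lower level separated by energy ΔE satisfy N2/N1 = (g2/g1) exp(−ΔE/kT); with equal degeneracies this ratio is below 1 at every positive temperature, so population inversion cannot be reached by heating alone and the medium must be pumped out of equilibrium. The sketch below assumes an idealized two-level system with equal degeneracies; the 633 nm wavelength and the function name are illustrative choices.

```python
import math

# Exact SI values of the physical constants used.
K_B = 1.380649e-23   # Boltzmann constant, J/K
H = 6.62607015e-34   # Planck constant, J*s
C = 299792458.0      # speed of light in vacuum, m/s


def equilibrium_population_ratio(wavelength_m, temperature_k):
    """Return N_upper / N_lower for a two-level system in thermal equilibrium.

    Assumes equal degeneracies for the two levels, so the ratio is the bare
    Boltzmann factor exp(-dE / (k_B T)), with level spacing dE = h c / wavelength.
    """
    delta_e = H * C / wavelength_m
    return math.exp(-delta_e / (K_B * temperature_k))


if __name__ == "__main__":
    # 633 nm is used purely as an illustrative visible wavelength.
    for t in (300, 3000, 30000):
        ratio = equilibrium_population_ratio(633e-9, t)
        print(f"T = {t:>5} K: N2/N1 = {ratio:.3e}, inverted: {ratio > 1}")
```

Raising the temperature only pushes the ratio toward 1 from below; it never exceeds 1, which is why a laser needs an external energy source to hold the medium away from equilibrium.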

Self-organization in chemistry

Self-organization in chemistry includes:

- reaction-diffusion systems and oscillating chemical reactions

- autocatalytic networks

- microphase separation of block copolymers
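Oscillating chemical reactions are often studied through simplified model mechanisms. The sketch below uses the Brusselator, a two-variable model developed by Prigogine and Lefever in Brussels, as an illustrative stand-in: it is not a model of any specific reaction listed above, and it omits diffusion, so it shows temporal oscillation rather than spatial patterns. With rate equations dx/dt = A + x²y − (B + 1)x and dy/dt = Bx − x²y, the steady state (A, B/A) is stable for B < 1 + A² and gives way to a sustained oscillation (a limit cycle) for B > 1 + A². Integration uses a fixed-step fourth-order Runge–Kutta scheme; the step size, run length, initial state and function names are assumptions.

```python
def brusselator(x, y, a, b):
    """Right-hand side of the Brusselator rate equations (dx/dt, dy/dt)."""
    return a + x * x * y - (b + 1.0) * x, b * x - x * x * y


def rk4_step(x, y, dt, a, b):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    k1x, k1y = brusselator(x, y, a, b)
    k2x, k2y = brusselator(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, a, b)
    k3x, k3y = brusselator(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, a, b)
    k4x, k4y = brusselator(x + dt * k3x, y + dt * k3y, a, b)
    x += dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
    y += dt / 6.0 * (k1y + 2 * k2y + 2 * k3y + k4y)
    return x, y


def late_amplitude(a, b, x0=1.2, y0=2.0, dt=0.01, t_end=100.0):
    """Integrate, then return max(x) - min(x) over the second half of the run."""
    x, y = x0, y0
    steps = int(t_end / dt)
    tail = []
    for n in range(steps):
        x, y = rk4_step(x, y, dt, a, b)
        if n >= steps // 2:
            tail.append(x)
    return max(tail) - min(tail)


if __name__ == "__main__":
    a = 1.0
    for b in (1.5, 3.0):  # threshold for sustained oscillation: b = 1 + a**2 = 2
        print(f"A = {a}, B = {b}: late-time amplitude of x = {late_amplitude(a, b):.4f}")
```

For A = 1 the run should report a late-time amplitude close to zero at B = 1.5 (the concentrations settle to the steady state) and a large amplitude at B = 3, where the system organizes itself into a periodic cycle while being continuously fed through the constant A and B.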

Self-organization in biology

According to Scott Camazine et al.:

“In biological systems self-organization is a process in which pattern at the global level of a system emerges solely from numerous interactions among the lower-level components of the system. Moreover, the rules specifying interactions among the system's components are executed using only local information, without reference to the global pattern.”

The following is an incomplete list of the diverse phenomena which have been described as self-organizing in biology.

- spontaneous folding of proteins and other biomacromolecules

- formation of lipid bilayer membranes

- homeostasis (the self-maintaining nature of systems from the cell to the whole organism)

- pattern formation and morphogenesis, or how the living organism develops and grows. See also embryology.

- the coordination of human movement, e.g. seminal studies of bimanual coordination by Kelso

- the creation of structures by social animals, such as social insects (bees, ants, termites), and many mammals

- flocking behaviour (such as the formation of flocks by birds, schools of fish, etc.)

- the origin of life itself from self-organizing chemical systems, in the theories of hypercycles and autocatalytic networks

- the organization of Earth's biosphere in a way that is broadly conducive to life (according to the controversial Gaia hypothesis)
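Flocking is commonly illustrated with the Vicsek model, a standard minimal model of collective motion (a swapped-in example; the article does not name a specific model). Each agent moves at constant speed and, at every step, adopts the average heading of all agents within a fixed radius plus a random perturbation, so it acts on local information only, in line with the description by Camazine et al. quoted above. This minimal sketch assumes a periodic square box, noise drawn uniformly from [−η/2, η/2], illustrative parameter values and hypothetical function names. Alignment is measured by the order parameter, the length of the mean unit velocity (1 for perfect alignment, close to 0 for random headings).

```python
import math
import random


def vicsek_step(xs, ys, thetas, box, radius, speed, noise, rng):
    """Update every heading to the local mean heading plus noise, then move."""
    n = len(xs)
    r2 = radius * radius
    new_thetas = []
    for i in range(n):
        s = c = 0.0
        for j in range(n):
            dx = xs[j] - xs[i]
            dy = ys[j] - ys[i]
            dx -= box * round(dx / box)  # minimum-image distance in the periodic box
            dy -= box * round(dy / box)
            if dx * dx + dy * dy <= r2:  # neighbourhood includes agent i itself
                s += math.sin(thetas[j])
                c += math.cos(thetas[j])
        new_thetas.append(math.atan2(s, c) + rng.uniform(-noise / 2, noise / 2))
    for i in range(n):
        xs[i] = (xs[i] + speed * math.cos(new_thetas[i])) % box
        ys[i] = (ys[i] + speed * math.sin(new_thetas[i])) % box
    thetas[:] = new_thetas


def order_parameter(thetas):
    """Length of the mean unit velocity vector."""
    sx = sum(math.cos(t) for t in thetas)
    sy = sum(math.sin(t) for t in thetas)
    return math.hypot(sx, sy) / len(thetas)


def run(n=60, box=2.0, radius=1.0, speed=0.03, noise=0.1, steps=100, seed=0):
    """Start from random positions and headings; return the final order parameter."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, box) for _ in range(n)]
    ys = [rng.uniform(0, box) for _ in range(n)]
    thetas = [rng.uniform(-math.pi, math.pi) for _ in range(n)]
    for _ in range(steps):
        vicsek_step(xs, ys, thetas, box, radius, speed, noise, rng)
    return order_parameter(thetas)


if __name__ == "__main__":
    for eta in (0.1, 2 * math.pi):
        print(f"noise {eta:.2f}: order parameter {run(noise=eta):.3f}")
```

With low noise the order parameter should approach 1 as the group aligns; with η = 2π each new heading is uniformly random and the order parameter stays small. The dense box used here makes alignment quick; larger or sparser systems take longer and may split into separate groups.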

Self-organization in mathematics and computer science

As mentioned above, phenomena from mathematics and computer science such as cellular automata, random graphs, and some instances of evolutionary computation and artificial life exhibit features of self-organization. In swarm robotics, self-organization is used to produce emergent behavior. In particular, the theory of random graphs has been used as a justification for self-organization as a general principle of complex systems. In the field of multi-agent systems, understanding how to engineer systems capable of self-organized behavior is a very active research area.
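Elementary cellular automata are among the simplest systems in which a purely local update rule produces large-scale structure. A minimal sketch, assuming Wolfram's numbering of the 256 elementary rules, wrap-around edges and a single live cell as the initial state (the article does not specify a particular automaton; the function names are hypothetical): each new cell value is the bit of the rule number selected by its three-cell neighbourhood.

```python
def step(cells, rule):
    """Apply an elementary CA rule (Wolfram numbering) once, with wrap-around edges."""
    n = len(cells)
    new = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        pattern = (left << 2) | (centre << 1) | right  # neighbourhood as a number 0..7
        new.append((rule >> pattern) & 1)               # bit `pattern` of the rule number
    return new


def run(rule, width=31, steps=15):
    """Evolve from a single live cell in the middle; return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run(90):
        print("".join("#" if c else "." for c in row))
```

Rule 90 (each cell becomes the XOR of its two neighbours) traces a Sierpiński-triangle pattern from this start, while `run(30)` produces a markedly irregular one, even though no cell ever sees more than its immediate neighbours.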

Self-organization in cybernetics

Wiener regarded the automatic serial identification of a black box and its subsequent reproduction as sufficient to meet the condition of self-organization. The importance of phase locking, or the "attraction of frequencies" as he called it, is discussed in the second edition of his "Cybernetics". Drexler sees self-replication as a key step in nanoscale and universal assembly.

By contrast, the four concurrently connected galvanometers of W. Ross Ashby's homeostat hunt, when perturbed, to converge on one of many possible stable states. Ashby used his state-counting measure of variety to describe stable states and produced, with Roger Conant, the "Good Regulator" theorem, which requires internal models for self-organized endurance and stability.

Warren McCulloch proposed "Redundancy of Potential Command" as characteristic of the organization of the brain and human nervous system and the necessary condition for self-organization.

Heinz von Foerster proposed redundancy, R = 1 − H/Hmax, where H is the entropy and Hmax its maximum possible value. In essence, this states that unused potential communication bandwidth is a measure of self-organization.
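Von Foerster's redundancy can be computed directly from observed state frequencies. A minimal sketch, assuming H is the Shannon entropy in bits of the empirical distribution of observed states and Hmax = log2 N for a system with N possible states (N ≥ 2); the function names and example sequences are hypothetical. R = 0 when all states are equally likely and R = 1 when the system stays in a single state.

```python
import math
from collections import Counter


def shannon_entropy(states):
    """Shannon entropy H, in bits, of the empirical distribution of `states`."""
    counts = Counter(states)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def redundancy(states, n_possible_states):
    """Von Foerster's R = 1 - H / Hmax, taking Hmax = log2(number of possible states).

    n_possible_states must be at least 2, otherwise Hmax is zero.
    """
    h_max = math.log2(n_possible_states)
    return 1.0 - shannon_entropy(states) / h_max


if __name__ == "__main__":
    # Hypothetical observation sequences over a 4-state alphabet.
    print(redundancy("ABCD" * 25, 4))  # every state equally likely: 0.0
    print(redundancy("AB" * 50, 4))    # only half the states ever used: 0.5
    print(redundancy("A" * 100, 4))    # a single state: 1.0
```

The more the observed behaviour is confined to a subset of the possible states, the larger R becomes, which is the sense in which unused bandwidth measures organization.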

In the 1970s, Stafford Beer considered this condition necessary for autonomy, which identifies self-organization in persisting and living systems. Using variety analysis, he applied his neurophysiologically derived recursive Viable System Model to management. It consists of five parts: the monitoring of performance of the survival processes (1), their management by recursive application of regulation (2), homeostatic operational control (3) and development (4), which produce maintenance of identity (5) under environmental perturbation. Focus is prioritized by an "algedonic loop" feedback: a sensitivity to both pain and pleasure.

In the 1990s, Gordon Pask pointed out that von Foerster's H and Hmax were not independent and interacted via countably infinite recursive concurrent spin processes (he favoured the Bohm interpretation), which he called concepts (liberally defined in any medium, "productive and, incidentally reproductive"). His strict definition of a concept as "a procedure to bring about a relation" permitted his theorem "Like concepts repel, unlike concepts attract" to state a general spin-based Principle of Self-organization. His edict, an exclusion principle stating "There are No Doppelgangers", means that no two concepts can be the same (all interactions occur from different perspectives, making time incommensurable for actors). This means that, after a sufficient duration, as differences assert themselves, all concepts will attract and coalesce as pink noise and entropy increases (see also Big Crunch, self-organized criticality). The theory is applicable to all organizationally closed or homeostatic processes that produce endurance and coherence (also in the sense of Rescher's coherence theory of truth, with the proviso that the sets and their members exert repulsive forces at their boundaries) through interactions: evolving, learning and adapting.

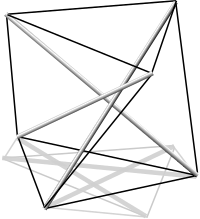

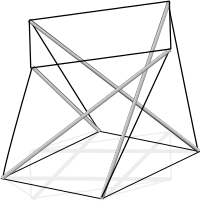

Pask's Interactions of actors "hard carapace" model is reflected in some of the ideas of emergence and coherence. It requires a knot emergence topology that produces radiation during interaction with a unit cell that has a prismatic tensegrity structure. Laughlin's contribution to emergence reflects some of these constraints.

Self-organization in human society

The self-organizing behaviour of social animals and the self-organization of simple mathematical structures both suggest that self-organization should be expected in human society. Tell-tale signs of self-organization are usually statistical properties shared with self-organizing physical systems (see Zipf's law, power law, Pareto principle). Examples such as Critical Mass, herd behaviour and groupthink abound in sociology, economics, behavioral finance and anthropology.

In social theory the concept of self-referentiality has been introduced as a sociological application of self-organization theory by Niklas Luhmann (1984). For Luhmann the elements of a social system are self-producing communications, i.e. a communication produces further communications and hence a social system can reproduce itself as long as there is dynamic communication. For Luhmann human beings are sensors in the environment of the system. Luhmann put forward a functional theory of society.

Self-organization in human and computer networks can give rise to a decentralized, distributed, self-healing system, protecting the security of the actors in the network by limiting the scope of knowledge of the entire system held by each individual actor. The Underground Railroad is a good example of this sort of network. The networks that arise from drug trafficking exhibit similar self-organizing properties. Parallel examples exist in the world of privacy-preserving computer networks such as Tor. In each case, the network as a whole exhibits distinctive synergistic behavior through the combination of the behaviors of individual actors in the network. Usually the growth of such networks is fueled by an ideology or sociological force that is adhered to or shared by all participants in the network.

In economics

In economics, a market economy is sometimes said to be self-organizing. Friedrich Hayek coined the term catallaxy to describe a "self-organizing system of voluntary co-operation" in regard to capitalism. Most modern economists hold that imposing central planning usually makes the self-organized economic system less efficient. By contrast, some socialist economists consider that market failures are so significant that self-organization produces bad results and that the state should direct production and pricing. Many economists adopt an intermediate position and recommend a mixture of market economy and command economy characteristics (sometimes called a mixed economy). When applied to economics, the concept of self-organization can quickly become ideologically imbued (as explained in chapter 5 of A. Marshall, The Unity of Nature, Imperial College Press, 2002).

In collective intelligence

Non-thermodynamic concepts of entropy and self-organization have been explored by many theorists. Examples include Cliff Joslyn and colleagues with their so-called "global brain" projects, and Marvin Minsky's "Society of Mind"; the policy of having no central editor in charge of the open-content online encyclopedia Wikipedia is another application of these principles (see collective intelligence).

Donella Meadows, who codified twelve leverage points that a self-organizing system could exploit to organize itself, was one of a school of theorists who saw human creativity as part of a general process of adapting human lifeways to the planet and taking humans out of conflict with natural processes. See Gaia philosophy, deep ecology, ecology movement and Green movement for similar self-organizing ideals. (The connections between self-organisation and Gaia theory and the environmental movement are explored in A. Marshall, 2002, The Unity of Nature, Imperial College Press: London).